Introduction

Even as technology companies preach technological and vendor neutrality, movements for inclusion, gender parity, and racial recognition have criticized tech giants for embedding racism in AI and algorithms. This is what has been coined the New Jim Code, a name that echoes the Jim Crow laws that enforced racial segregation in the United States.

Racial prejudices.

In her acclaimed 2019 critique, Race After Technology: Abolitionist Tools for the New Jim Code, Ruha Benjamin lays bare the nuanced ways in which modern technology creates, supports, and amplifies racism and white supremacy. From AI-judged beauty contests to genetic and biometric testing, AI appears to carry undertones of racial prejudice.

As technology becomes increasingly ubiquitous and troubling tech incidents grow in number (like Google Photos labeling Black people as gorillas), more people are questioning the exalted status of technology in society. However, these incidents are still frequently perceived as ‘one-offs’ rather than symptoms of an underlying malaise.

These coded biases make it difficult for consumers to disregard the topic of racism, even if they try. The first point of departure is the question of what racism is. Dr Benjamin points out that racism can occur without a primary intention to harm. If we compare technology to law, racism can be embedded in a technology just as it can be embedded in a country’s laws, prejudicing and disadvantaging certain groups.

The digital realm.

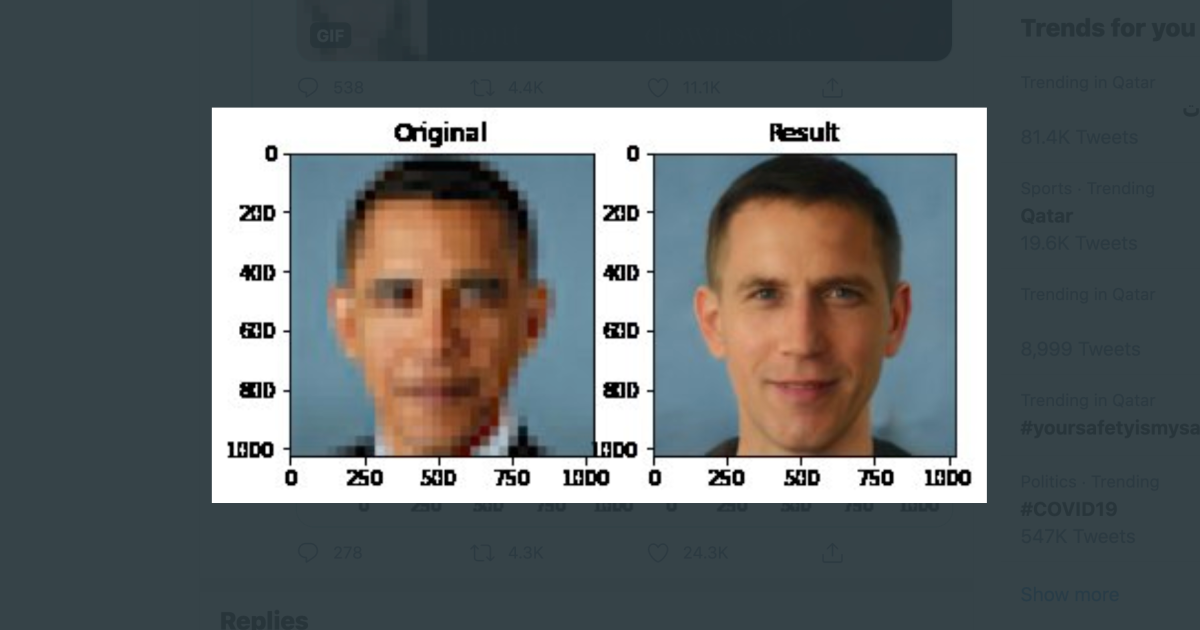

The author of a technology, in this case a web technology, might not sit down and craft code or an AI algorithm that they primarily intend to be racist, but they might ignore key factors in their UI/UX design, making the end-user product discriminatory in nature. Techno-altruists, for example, might have designed AI to stratify surveillance for a good cause. But as algorithms like those that power facial recognition grow in reach, they tend to discriminate against minorities in the digital realm.

The argument.

How do we, as designers and consumers, reclaim our agency in digital ecospheres? Dr Benjamin, an Associate Professor of African American Studies at Princeton University and founder of the Just Data Lab, brings to light how racism is carried into these ecospheres. It’s time for tech to stop being racist. Darker skin absorbs more light and therefore reflects less, making it more difficult to detect with optical sensors and image-processing software, defenders of the technology say. Some tech giants, like Facebook, have defended their soap dispensers as temperaturist, on the grounds that they all use infrared, which measures heat.

Scholars studying the intersection of technology and society, and those in technical fields looking for an outside critical understanding of their own work, will find the book an important jumping-off point or a valuable resource for deepening their understanding of racism within their fields. Dr Benjamin’s work is more urgent and relevant than ever, given the social reckoning with Black understandings of systemic racism that followed the murder of George Floyd in May 2020.

Racial prejudices.

Rather than a revolutionary new theory, the book’s major contribution may lie in exposing these ideas to a broader audience and in acting as a catalyst for deeper examination of the technologies and situations that Benjamin touches on.

Someone with no experience in building and testing hardware and software tends to default to racism as the explanation. Here is another theory. The developers either did not come up with enough testing scenarios, or they did not include enough (or the right) test data to ensure that all skin tones were handled under different conditions, which can involve an astronomical number of variables. Even so, to think that racism is somehow included in the development of a product is not foolish: a gap in testing disadvantages the people it leaves out, whether or not anyone intended harm. Fixing an issue after release is expensive, and missing something so obvious to test for is embarrassing to the team. Although the hardware and software community is becoming more diverse, I think there is still room for improvement in the testing variables used in future iterations.
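To make the testing point concrete, here is a minimal sketch of what checking a sensor or detector across skin tones and conditions could look like. It is not taken from Benjamin's book or from any real product: the group labels, the lighting conditions, the sample results, and the 5% tolerance are all hypothetical, chosen only to show the idea of reporting results per group instead of as one overall average.

```python
from collections import defaultdict


def detection_rate_by_group(results):
    """Return the share of successful detections for each group.

    `results` is an iterable of (group, detected) pairs, where `group`
    is any hashable label (here a hypothetical (skin tone, lighting) pair)
    and `detected` is True when the device responded correctly.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in results:
        totals[group] += 1
        if detected:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def underserved_groups(rates, max_gap=0.05):
    """List groups whose rate trails the best group by more than max_gap.

    The 0.05 default is an arbitrary illustrative tolerance, not a standard.
    """
    if not rates:
        return []
    best = max(rates.values())
    return sorted(group for group, rate in rates.items() if best - rate > max_gap)


# Hypothetical test log: (skin tone, lighting) and whether detection succeeded.
sample_results = [
    (("lighter", "bright"), True), (("lighter", "bright"), True),
    (("lighter", "dim"), True), (("lighter", "dim"), True),
    (("darker", "bright"), True), (("darker", "bright"), True),
    (("darker", "dim"), True), (("darker", "dim"), False),
]

rates = detection_rate_by_group(sample_results)
flagged = underserved_groups(rates)
print(rates)
print(flagged)
```

In this made-up log the overall success rate is 7 out of 8, which looks acceptable on its own; only the per-group breakdown shows that every failure falls on one combination of skin tone and lighting. That is the kind of gap a single aggregate test result would hide.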

Benjamin questions the problematic idea that new technologies are morally superior to humans, due to their neutrality, objectivity, and lack of bias. Benjamin suggests that this popular discourse allows racism to reign through technologies with a lack of accountability for the systems and designers. She explains that the ‘New Jim Code’ is the implementation of new technologies that reflect and reproduce existing inequities, often promoted as more progressive than discriminatory systems of the past.

Bibliography

Benjamin, R., 2019. Race after technology: Abolitionist tools for the new Jim code. Social Forces.

Garrett, P.M., 2021. Books Review: Ruha Benjamin Race After Technology: Abolition Tools for a New Jim Code.

Ko, A.J., 2021. Book review: Race after Technology: Abolitionist Tools for the New Jim Code.

The Radical AI Podcast: ‘Love, Challenge, and Hope: Building a Movement to Dismantle the New Jim Code’. Available at: https://podcasts.apple.com/us/podcast/love-challenge-hope-building-movement-to-dismantle/id1505229145?i=1000473742475 (Accessed: 17 June 2021).